Abstract

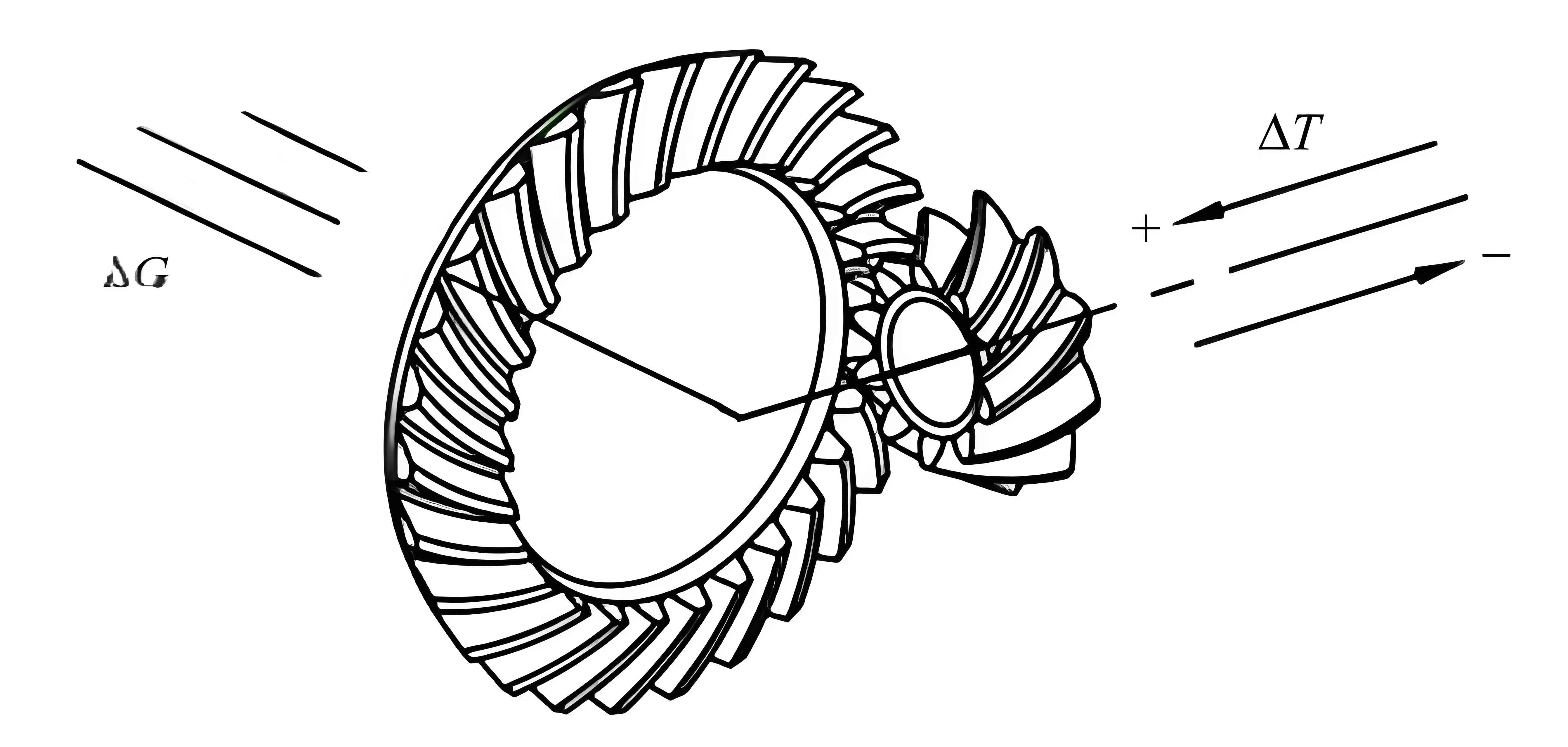

Spiral bevel gears are widely used in high-speed, heavy-duty, and high-precision mechanical transmissions in the aerospace field because of their smooth transmission, high bearing capacity, low noise, and compact structure. The position, size, and direction of the contact pattern reflect the combined errors of the gear pair and directly affect transmission quality, bearing capacity, and vibration noise. In production practice, contact patterns are still inspected manually with the “color paste method”. Although this method enables quantitative analysis, it is subjective and labor-intensive, and it offers low flexibility and efficiency. This thesis studies methods for recognizing and detecting spiral bevel gear contact patterns based on machine vision, image processing, and deep learning. It verifies a fully automated detection process for tooth surface contact patterns: image acquisition, three-dimensional reconstruction, point cloud processing, and parameter measurement. The proposed method can significantly improve detection accuracy and efficiency, providing a new technique of high application value for evaluating the surface quality of spiral bevel gears.

1. Introduction

1.1 Source of the Research Topic

This master’s thesis topic originates from the National Key Research and Development Program project: Demonstration and Application of Key Technologies for High-Performance Bevel Gear Transmission (2020YFB2010200).

1.2 Research Background and Significance

Spiral bevel gears are employed in fields such as automobiles, engineering equipment, and agricultural machinery, and they occupy a crucial position in aviation, navigation, and precision machine tools because of their smooth transmission, high bearing capacity, low noise, and compact structure. When a spiral bevel gear pair transmits motion, friction generates contact patterns on the tooth surfaces. The position, size, and shape of these contact patterns significantly affect the transmission quality, efficiency, bearing capacity, and vibration noise of the gear pair.

The assembly quality of spiral bevel gears significantly influences the performance of the transmission system. Contact patterns reflect the combined errors of the bevel gear pair, including pressure angle, helix angle, tooth surface pitch line curvature, and surface roughness, and serve as a direct indicator of gear operating conditions. The most effective way to confirm gear pair quality is to inspect the contact pattern area after gear meshing.

Currently, the parameter measurement of spiral bevel gear contact patterns relies on manual detection using the color paste method. In this process, a thin layer of red lead powder or contact pattern paint is uniformly sprayed on adjacent teeth of the driving gear mounted on a rolling inspection machine. After operation, part of the powder on the tooth surface has been wiped off by contact friction, leaving a bright contact area, while the non-contact area retains the sprayed powder. This contrast delimits the contact pattern region. After meshing transmission and rolling inspection, the spiral bevel gear is removed, and tape is applied to the target tooth surface to record the contact pattern. The taped contact pattern is then used for geometric parameter measurement.

This method, which relies on tape for color paste recording and ruler measurement combined with personal experience, provides quantitative analysis but has significant limitations in operation. It is highly subjective, labor-intensive, and lacks flexibility and efficiency. Specifically, the boundary of the gear contact pattern is often fuzzy with a certain width, and the measurement of the distance from the edge is influenced by human judgment, depending on the operator’s experience. Moreover, traditional paper records cannot support digital comparison, verification, and archiving of contact patterns.

The traditional color paste method for spiral bevel gears suffers from diverse boundary morphology, inaccurate manual measurement and judgment, and the inability to archive records electronically or to support assembly quality evaluation. There is therefore an urgent need for a precise and efficient technology and system for recognizing and detecting spiral bevel gear contact patterns to meet production needs.

1.3 Domestic and International Research Status and Analysis

As intelligent technologies advance, the demand for acquiring three-dimensional (3D) information about targets is increasing, leading to rapid development in image recognition and 3D reconstruction technologies.

3D reconstruction uses computers to describe and process targets or scenes and to restore their 3D information. It is a crucial research topic in machine vision. 3D reconstruction technology has broad application prospects in fields such as robot autonomous navigation, driverless vehicles, non-contact 3D measurement, reverse engineering, medical imaging, security, traffic monitoring, virtual reality, and human-computer interaction. Requirements on reconstruction accuracy, operating environment, and miniaturization are also becoming increasingly demanding.

Among the various methods, binocular stereo vision and structured light are the most representative. Binocular stereo vision uses two cameras to perceive the surrounding environment, mimicking human vision. It is a significant branch of computer vision and a highly active research area. A binocular stereo vision 3D reconstruction system offers advantages such as non-contact operation, a simple and flexible structure, a large working range, and real-time capability, and it suits various indoor and outdoor environments. However, current binocular stereo vision technology relies heavily on the texture of the target surface and scene. It cannot accurately extract areas with missing or weak texture, so the effective feature points are sparse. Numerous repetitive patterns in the images also make efficient stereo matching difficult and lead to a high error rate. Obtaining a high-density 3D reconstruction requires global stereo matching algorithms such as dynamic programming, graph cuts, and belief propagation, but these algorithms have high complexity, large computational loads, and poor real-time performance, which significantly limits the use of binocular stereo vision.

Structured light methods actively project patterns with a known distribution or encoding onto the measured object, capture and analyze the modulated patterns, and then obtain the depth of the measured object based on the triangulation principle. Structured light methods place few demands on the texture of the measured object and can use different structured light patterns in different application scenarios. However, structured light is susceptible to interference from ambient light, has a short working distance, is unsuitable for outdoor applications, and involves complex manufacturing processes and high costs. Additionally, calibrating the structured light projector against the camera is difficult.

3D measurement can be divided into contact and non-contact methods, each with its advantages and disadvantages, as shown in Table 1. In recent years, with the rapid development of computer vision technology and image processing technology, as well as the urgent needs of engineering applications, domestic and foreign scholars have proposed a series of new non-contact 3D measurement methods with independent intellectual property rights. Based on whether active illumination light sources are used during the measurement process, optical 3D measurement techniques can be classified into passive and active measurement, as illustrated in Figure 1.

| Measurement Method | Advantages | Disadvantages |
|---|---|---|
| Contact Measurement | High accuracy | Slow measurement speed; easily damages the measured object |
| Non-contact Measurement | Fast measurement speed; no damage to the measured object | High computational complexity; large system error |

Table 1: Comparison of Contact and Non-contact Measurement Methods

1.3.1 Stereo Vision

Stereo vision is a technology with an early start and widespread research and application. In the 1950s, when computer vision research was in its infancy, many researchers began focusing on the analysis and interpretation of characters, microscopic images, and aerial photographs, emphasizing the analysis, recognition, and understanding of 2D images.

Binocular vision obtains depth information by acquiring images from two cameras at different angles and calculating the disparity (parallax) between the images, thereby restoring the 3D shape of the target surface. The process consists of four main steps: binocular camera calibration, stereo rectification, stereo matching, and 3D reconstruction. Binocular camera calibration calibrates the left and right cameras together to obtain the internal and external camera parameters and the positional relationship between the cameras. Stereo matching computes the matching cost between the left and right images and then aggregates the cost to obtain the disparity values. In 3D reconstruction, the disparities of corresponding feature points in the left and right images are converted into target depth based on the parallax principle, and the point cloud data is optimized to obtain the target’s 3D shape.
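The matching and reconstruction steps can be illustrated with a minimal sketch. It assumes an already rectified image pair, uses a sum-of-absolute-differences (SAD) window as a simple stand-in for matching cost plus aggregation, and converts disparity to depth with the pinhole relation Z = f·B/d. The function names, the scanline values, the focal length, and the baseline are hypothetical and chosen only for illustration.

```python
def sad_disparity(left, right, x, half_window, max_disparity):
    """Disparity at column x of a rectified left scanline, found by minimising
    the sum of absolute differences (SAD) over a small window."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        # The candidate window in the right image is shifted left by d pixels.
        if x - d - half_window < 0:
            break
        cost = sum(
            abs(left[x + k] - right[x - d + k])
            for k in range(-half_window, half_window + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_mm):
    """Depth from the pinhole stereo relation Z = f * B / d (assumed model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


# Hypothetical scanlines: the right view sees the same feature 3 pixels to the left.
left_row = [0, 0, 0, 0, 0, 9, 5, 7, 0, 0, 0, 0]
right_row = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0, 0, 0]

d = sad_disparity(left_row, right_row, x=6, half_window=1, max_disparity=5)
z = depth_from_disparity(d, focal_px=1200.0, baseline_mm=60.0)
```

A local window search like this is fast but fails on weakly textured regions, where many disparities produce nearly the same cost.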

Although binocular vision is widely used in industrial measurement due to its high measurement accuracy, it also has disadvantages such as complicated feature matching steps, slow measurement speed, and high equipment configuration costs.

Monocular vision refers to the use of a single camera to capture multiple images from multiple angles, with the depth of the object obtained through image analysis. Because of its low equipment cost, fast 3D point cloud reconstruction, and ease of implementation, monocular vision has received significant attention from scholars and experts in recent years. Within monocular vision, shape-recovery methods have developed considerably, the most prominent being the shading method (shape from shading) and the texture method (shape from texture). The shading method first constructs the optical reflection model of the target and then establishes the relationship between the grayscale of the target and the height of the corresponding point, recovering the 3D shape of the target by calculating its height. However, the recovered height is not unique. The texture method recovers the height of the target from the size and shape of the texture elements on its surface, thereby reconstructing the 3D shape of the surface. However, it remains deficient on low-texture surfaces.

Monocular vision measurement devices are simple and easy to operate, but they place high demands on the calibration process and the placement accuracy of the instruments. Because of instrument placement errors, lens distortion, and lens aberrations, precise measurement is difficult to achieve unless a plane calibration method is used, which typically requires manufacturing very high-precision dedicated calibration objects.
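The non-uniqueness of recovering shape from grayscale can be seen in a small sketch. It assumes a Lambertian reflection model (the text does not name a specific model) with the light source along the viewing direction. The function name and parameters are hypothetical.

```python
import math


def lambertian_intensity(p, q, light=(0.0, 0.0, 1.0), albedo=1.0):
    """Image intensity of a surface patch with gradient (p, q) = (dz/dx, dz/dy)
    under an assumed Lambertian model: I = albedo * max(0, n . l)."""
    norm = math.sqrt(p * p + q * q + 1.0)
    normal = (-p / norm, -q / norm, 1.0 / norm)
    shading = sum(n_i * l_i for n_i, l_i in zip(normal, light))
    return albedo * max(0.0, shading)


# Two differently oriented patches produce the same grayscale value,
# so a single intensity cannot determine the surface orientation.
i_a = lambertian_intensity(1.0, 0.0)   # slope along x
i_b = lambertian_intensity(0.0, 1.0)   # slope along y
```

Both patches have the same brightness although they tilt in different directions, which is why shading-based recovery needs additional constraints such as smoothness or boundary conditions.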

In recent years, domestic and foreign researchers have conducted extensive research on various stereo vision methods and achieved fruitful research results. The core content of binocular vision is stereo matching, and different matching algorithms produce varying matching effects. Li Chao from Ocean University of China proposed a new method for 3D reconstruction of underwater environments based on parallel binocular vision, combining the Harris and SIFT algorithms to uniformly extract feature points throughout the image. By setting an appropriate threshold, a sufficient number of matched feature points can be obtained. Additionally, feature descriptors are used to describe feature points, effectively solving the issue of low matching efficiency due to consistent image features between the two images. Zhang Haibo et al. from Xi’an Technological University used the SIFT algorithm to extract and describe feature points from two images and proposed an improved KD-tree algorithm to search for feature points, improving the efficiency of feature point matching. Based on this, the random sample consensus (RANSAC) algorithm was used to purify the matching pairs, thereby obtaining more precise feature point matching pairs. Liu Jia from Shenyang Industrial University introduced a scale parameter into the image transformation to enhance scale invariance and replaced the SIFT operator with a new Harris operator to detect corner points in the image. After obtaining the feature vectors, image matching was achieved. This algorithm not only shortens the calculation time but also effectively improves the calculation accuracy and robustness.
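The RANSAC purification step mentioned above can be sketched as follows. This is a simplified illustration, not the cited authors' implementation: it assumes the correct matches are related by a pure 2D translation, whereas real stereo pipelines typically fit a fundamental matrix or homography. The function name, tolerance, and match data are hypothetical.

```python
import random


def ransac_translation(matches, tol=1.0, iterations=200, seed=0):
    """Keep the matches consistent with the best 2D translation hypothesis.

    matches: list of ((x1, y1), (x2, y2)) candidate correspondences.
    Returns ((dx, dy), inliers).
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        # Minimal sample: one match fully determines a translation.
        (x1, y1), (x2, y2) = matches[rng.randrange(len(matches))]
        dx, dy = x2 - x1, y2 - y1
        inliers = [
            (a, b) for a, b in matches
            if abs(b[0] - a[0] - dx) <= tol and abs(b[1] - a[1] - dy) <= tol
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refit the model on the consensus set.
    n = len(best_inliers)
    dx = sum(b[0] - a[0] for a, b in best_inliers) / n
    dy = sum(b[1] - a[1] for a, b in best_inliers) / n
    return (dx, dy), best_inliers


# Six consistent matches (shift of (5, -2)) and two gross mismatches.
candidate_matches = [
    ((0, 0), (5, -2)), ((1, 3), (6, 1)), ((4, 4), (9, 2)),
    ((7, 1), (12, -1)), ((2, 8), (7, 6)), ((9, 9), (14, 7)),
    ((3, 3), (20, 20)), ((5, 5), (0, 30)),
]
model, kept = ransac_translation(candidate_matches)
```

The two mismatches each agree only with themselves, so they never form the largest consensus set and are discarded.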

Wang Daidong and Yuan Kai et al. improved the SIFT algorithm and proposed corresponding image matching algorithms for specific environmental scenarios. Wu et al. proposed a new non-contact, non-invasive stereo vision method for measuring the dynamic vibration response of mine hoisting ropes. This method combines image processing algorithms and the 2D-DIC algorithm, simplifying the stereo matching process for target objects in two synchronized image sequences. Bao et al. used a novel Canny-Zernike joint algorithm to quantitatively evaluate the crack width on the surface of concrete structures, avoiding the step of attaching a ruler to the concrete surface for measurement unit conversion compared to monocular vision.

The improved feature matching algorithms above achieve good feature extraction and image matching results for most images with complex backgrounds but do not perform well in low-texture regions. Improving these algorithms therefore remains an urgent problem.

To address the low matching rates of existing image matching algorithms on low-texture images, Zhang Ying from Shanghai Normal University proposed two methods, eight-channel belief propagation matching and segmentation-based stereo matching, and added pixels with higher confidence levels to improve matching accuracy. Additionally, a mean shift-based color segmentation algorithm was proposed to segment the color information of the reference image. Regions with consistent colors were extracted, and the matching results in these regions were optimized to obtain a more precise disparity map. The results show that this method matches discontinuous and weakly textured regions better, but it also has drawbacks. The color segmentation assumes that depth is continuous within regions of consistent color, which may introduce mismatches during optimization, especially in regions with rich color variations. Cao Libo from Chongqing University of Technology proposed an ORB feature point detection and matching algorithm that can effectively match massive image features when texture information is lacking. Key points are extracted and filtered, and registration is performed on the retained key points, improving the speed of 3D point cloud registration. Furthermore, the nearest neighbor algorithm is combined with the iterative closest point (ICP) algorithm to reduce the error of the registered point cloud, and the KD-tree technique is used to accelerate point cloud calculations, improving both matching accuracy and speed. Ye et al. proposed a new point-like structured light encoding strategy based on a time-modulated vertical-cavity surface-emitting laser (VCSEL) array, which encodes the time dimension into structured light, improving stereo matching accuracy while simplifying the algorithm. Wang et al. proposed an improved BM stereo matching algorithm that can quickly obtain the disparity map of edge points. Finally, the least squares method is used to fit the coordinates of edge points, accurately and efficiently realizing the real-time detection of pipe inner diameters.
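The combination of nearest-neighbor search and ICP described above can be illustrated with a deliberately reduced sketch. It assumes 2D points and a translation-only alignment, and it uses a brute-force nearest-neighbor search where a KD-tree would be used in practice. Full ICP also estimates rotation. The function name and point sets are hypothetical.

```python
def icp_translation(source, target, iterations=20, tol=1e-9):
    """Estimate the 2D translation (tx, ty) that aligns source onto target by
    alternating nearest-neighbour matching and a mean-offset update."""
    tx, ty = 0.0, 0.0
    for _ in range(iterations):
        sum_dx = sum_dy = 0.0
        for sx, sy in source:
            px, py = sx + tx, sy + ty
            # Brute-force nearest neighbour; a KD-tree would speed up this search.
            nx, ny = min(
                target, key=lambda t: (t[0] - px) ** 2 + (t[1] - py) ** 2
            )
            sum_dx += nx - px
            sum_dy += ny - py
        # For a pure translation, the least-squares update is the mean offset.
        step_x, step_y = sum_dx / len(source), sum_dy / len(source)
        tx, ty = tx + step_x, ty + step_y
        if abs(step_x) < tol and abs(step_y) < tol:
            break
    return tx, ty


target_cloud = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0), (5.0, 3.0)]
source_cloud = [(x - 1.0, y + 0.5) for x, y in target_cloud]
shift = icp_translation(source_cloud, target_cloud)
```

Because the initial offset is small compared with the point spacing, every point finds its true partner in the first iteration. With a poor initial alignment, ICP can converge to a wrong local minimum, which is why a coarse key-point registration usually precedes it.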

Compared to binocular vision, monocular vision has a simpler structure, lower cost, lower requirements for the space and distance required for shooting, and no need for prior camera calibration. Therefore, many experts and scholars have conducted in-depth research on monocular vision technology.

Zhong Zhi, Zheng Yuntian, et al. from Harbin Engineering University proposed an experimental design method for underwater target 3D reconstruction based on monocular vision, using a monocular camera for multi-view surrounding shooting to obtain the original dataset of underwater target objects. The PMVS (Patch-based Multi-view Stereo) algorithm is used to densify the sparse point cloud. A SIFT-HC feature matching algorithm is proposed that fuses color information during the grayscale conversion of color images, effectively increasing the number of usable feature points. Zhu Shukai from Shantou University used a three-color ring light source to illuminate the measured object from multiple angles, enriching the surface color information of the measured object. Combined with a 3CCD progressive scan RGB industrial camera, this achieves high-precision and rapid 3D detection of the measured object. Xie Nan from Xi’an University of Science and Technology proposed a new method for 3D reconstruction and localization that combines monocular vision and LiDAR (Light Detection and Ranging) to address the insufficient stability of single-sensor measurements and the inadequacy of LiDAR in global localization. Feature extraction is performed using ORB corner features with RANSAC mismatch elimination, completing the map matching of 3D point clouds and 2D grids.

Reconstruction based on multi-sensor data fusion can obtain richer image and 3D information. Zeng Hao et al. from Nanchang Hangkong University studied a 3D reconstruction method for moving targets using multi-sensor fusion of laser, visible light, and infrared sensors. Their work covers a real-time laser acquisition platform with data preprocessing, external parameter calibration between the camera and laser, fusion of visible and infrared images, detection of moving targets, extraction of point clouds, 3D mesh model reconstruction, and color mapping, and it exploits the advantages of each sensor to complete the detection and 3D reconstruction of targets in the scene. Jia et al. proposed a robust pattern decoding method based on the single-lens M-array mode of coded structured light, which can reconstruct target objects in a single-camera shooting mode. Deep convolutional neural networks (DCNNs) and chain sequence features are used to accurately classify pattern elements and key points, realizing the 3D reconstruction of objects.

For objects with a wide range of surface image gray levels, such as metal and oily surfaces with high reflectivity, and objects with high color contrast, there are currently two mainly used methods. One is to directly use measurement equipment such as coordinate measuring machines (CMMs), which can achieve high accuracy but are time-consuming and can only measure a small number of points, with high equipment maintenance costs. The other method, which is more widely applied, involves spraying a special powder on the surface of high dynamic range objects to improve their reflectivity, making the reflected light intensity dynamic range consistent with the camera’s sensing range, thereby better facilitating optical 3D measurement.

Products based on this principle, such as 3D scanners, are available on the market, but their prices are high. Moreover, uneven powder spraying can increase measurement errors, and batch testing introduces additional processes and pollution. Some measured objects also have high surface quality requirements, so spraying, which can damage the surface, is usually not allowed. This method therefore has its own limitations.

In terms of anti-noise and accuracy, monocular vision systems are inferior to binocular vision systems. However, binocular vision systems can only measure within the common field of view of the two cameras.

To address the above issues, Liu Guihua, a doctoral student at Xi’an Jiaotong University, proposed a new solution. While retaining the hardware of the binocular system, the binocular vision system is split into two monocular systems and a binocular system. The left camera and projector, as well as the right camera and projector, form two monocular systems, respectively, to compensate for data missing due to highlights, occlusion, or other reasons during single-camera shooting. Finally, the three systems are fused.

This single-binocular combined 3D measurement system fuses the advantages of both, maintaining the high accuracy and robustness of the binocular system while using monocular data to cover areas that binocular vision cannot measure. Image segmentation technology is introduced to select reliable monocular data, ultimately obtaining complete surface data. The single-binocular image fusion technique detects large, complex-shaped targets well, but for small targets the detection device is overly complex and the detection cost is high.

1.3.2 Structured Light

During image matching, difficulties arise due to various factors leading to different matching discrepancies, mainly manifested as follows: 1) Grayscale discrepancies. For binocular vision, different shooting angles and varying lighting conditions on the object surface can significantly alter the grayscale values in the image, making measurements impossible or leading to low accuracy. For high-contrast or reflective materials, painting can be a solution, but it is not applicable to many objects that do not support painting. 2) Occlusion effects. When using binocular vision systems for capturing images, occlusion is inevitable, resulting in asymmetric left and right images, which increases the difficulty of matching. 3) Feature extraction discrepancies. Image features are the basis for image matching. When extracting features from the left and right images, even minor inconsistencies in the same areas can lead to differences in feature extraction, thereby complicating matching. 4) Noise. Imaging systems are rarely in an ideal state, and noise exists in every link, affecting images differently. This also impacts the accuracy of matching.

The research object of this thesis is the high-performance, complex-manufactured spiral bevel gear with contact patterns on its tooth surfaces. These gears are made of metal and therefore have a high dynamic grayscale range. At present, measurement precision, automation, and adaptability for such objects remain insufficient, so open problems remain in this research field.

Current measurements of spiral bevel gears mostly focus on qualitative detection of tooth shapes or defects. Additionally, the tooth surface parameter measurement standards often do not match the actual detection standards. Many methods project the curved surface onto an axial plane for recognition and measurement. Researchers such as Lei Nannan, Yang Hongbin from Henan University of Science and Technology, and Yang Feng from Central South University have conducted stereo matching and 3D reconstruction on spiral bevel gears to obtain 3D information on the tooth meshing area and projected it onto the axial plane for quantitative analysis. However, the texture of spiral bevel gears is not distinct, and the grayscale variation on the tooth surface is large, posing great challenges to 3D reconstruction and stereo matching. It is difficult to find feature points in both the left and right images, and the point cloud obtained after matching is often too sparse to complete the 3D reconstruction of the entire tooth surface and contact pattern. Obtaining more information requires integrating multiple sensors, that is, multi-sensor fusion.

Currently, data fusion methods based on binocular stereo vision primarily achieve this by adding structured light projection, laser emission, etc., thereby reducing the dependence on surface texture and environmental factors, lowering the error rate of mismatching, and improving the density and accuracy of 3D point cloud data. Meanwhile, the introduction of fusion solutions can effectively address issues existing in the calibration process of current structured light systems.

Domestically, most 3D measurement instruments are still positioned in the low-end and mid-low-end markets. Existing 3D scanners mostly obtain 3D reconstructed models of objects but lack color information and cannot perform dimensional measurements. In the high-end market, especially advanced 3D optical measurement systems from Germany, the acquisition cost is extremely high. Generally, domestic small and medium-sized enterprises cannot afford such equipment, which greatly limits their development and production efficiency. Therefore, many domestic scholars have conducted in-depth research on structured light stereo measurement technology.

Researchers such as Wu Zining from the University of Electronic Science and Technology of China have proposed a dental occlusion surface 3D reconstruction technique based on binocular structured light, using a four-step ordered periodic phase-shifting algorithm to project longitudinal and transverse grating fringes onto the measured object. The absolute phase is obtained by phase-shifting, and depth calculation is performed using the SGBM stereo matching algorithm. Based on this, the absolute phase map is inverted to calculate a new cycle series, solving the stripe mutation problem in traditional phase-shifting methods. Yang Fan from the National University of Defense Technology combined a binocular system with projected speckle structured light, utilizing an improved LoG speckle detection algorithm to efficiently complete sub-pixel disparity calculations without complex global matching and optimization. This method achieves high measurement accuracy on flat surfaces but experiences reduced accuracy on curved surfaces or large-angle inclined planes. Chen Lingjie et al. from Zhejiang University of Technology proposed a binocular encoded structured light method based on codeword matching, which performs well in scenarios with insufficient color and texture. Sha Ou, a doctoral student at the University of Chinese Academy of Sciences, used coded marker points as a positioning medium and studied the influence of various structural parameters on reconstruction results using line laser binocular vision technology. Li Jing and Wang Wei from the National University of Defense Technology proposed a new method for 3D reconstruction combining binocular stereo vision with structured light encoding stripe spatial constraints. They combined epipolar constraints with encoding constraints, adopting a new search algorithm to achieve left-right view matching and calculating the depth of the target surface based on stereometric principles to obtain the 3D point cloud of the target object. Unlike conventional straight stripe projection methods, Wang Ye et al. from Sichuan University improved the circular projection profilometry using Fourier transform and achieved good full-field out-of-plane measurement results.
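The phase-shifting step mentioned above can be illustrated with the standard four-step formula. This is a generic sketch, not the cited ordered periodic variant. It assumes four fringe images with phase shifts of 0, π/2, π, and 3π/2, modeled as I_k = A + B·cos(φ + (k−1)·π/2). The function names and the values of A and B are hypothetical.

```python
import math


def wrapped_phase(i1, i2, i3, i4):
    """Wrapped phase in (-pi, pi] from four intensities with shifts of
    0, pi/2, pi, 3*pi/2 (assumed fringe model I_k = A + B*cos(phi + shift))."""
    # I4 - I2 = 2B*sin(phi) and I1 - I3 = 2B*cos(phi), so A and B cancel.
    return math.atan2(i4 - i2, i1 - i3)


def synth_intensities(phi, background=100.0, modulation=50.0):
    """Synthesize the four fringe intensities for a true phase phi."""
    return tuple(
        background + modulation * math.cos(phi + k * math.pi / 2.0)
        for k in range(4)
    )


phi_small = wrapped_phase(*synth_intensities(0.7))   # recovers about 0.7
phi_large = wrapped_phase(*synth_intensities(4.0))   # wraps to about 4.0 - 2*pi
```

The second result shows why an unwrapping step is needed: the formula only returns the phase modulo 2π, so the fringe order must be recovered separately to obtain the absolute phase.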

In the patterns projected by structured light, the standard fringe pattern and the phase information it carries are two-dimensional, while the surface topography of the measured object is three-dimensional. The relationship between these two types of information can be described through a system model. The essence of 3D imaging technology is to obtain 3D information through computation on 2D data under known parameters. This process is called 3D reconstruction. It first requires using some existing 3D data and the corresponding 2D data to estimate the parameters of the established model, a step closely related to system calibration. System calibration and the system model are thus tightly connected and directly affect the performance and accuracy of 3D imaging. Fringe projection profilometry balances system flexibility and measurement accuracy well and is currently one of the most promising new technologies internationally.

Therefore, how to construct a reasonable system model and maximize reconstruction accuracy while improving reconstruction computation efficiency is a meaningful research topic. Coupling system model construction with fringe pattern processing and phase calculation, in addition to the independent phase recovery algorithm, can provide additional constraints for fringe pattern and phase calculations. This has great development prospects in improving fringe pattern encoding efficiency and the imaging quality of special surfaces.